Swabha Swayamdipta

Gabilan Assistant Professor • USC Viterbi CS • Associate Director of USC Center for AI and Society • Amazon Scholar • USC NLP

My goal is to design robust and reliable frameworks that allow AI systems to be broadly and safely deployed, especially in applications with societal implications. Three directions that this corresponds to are:


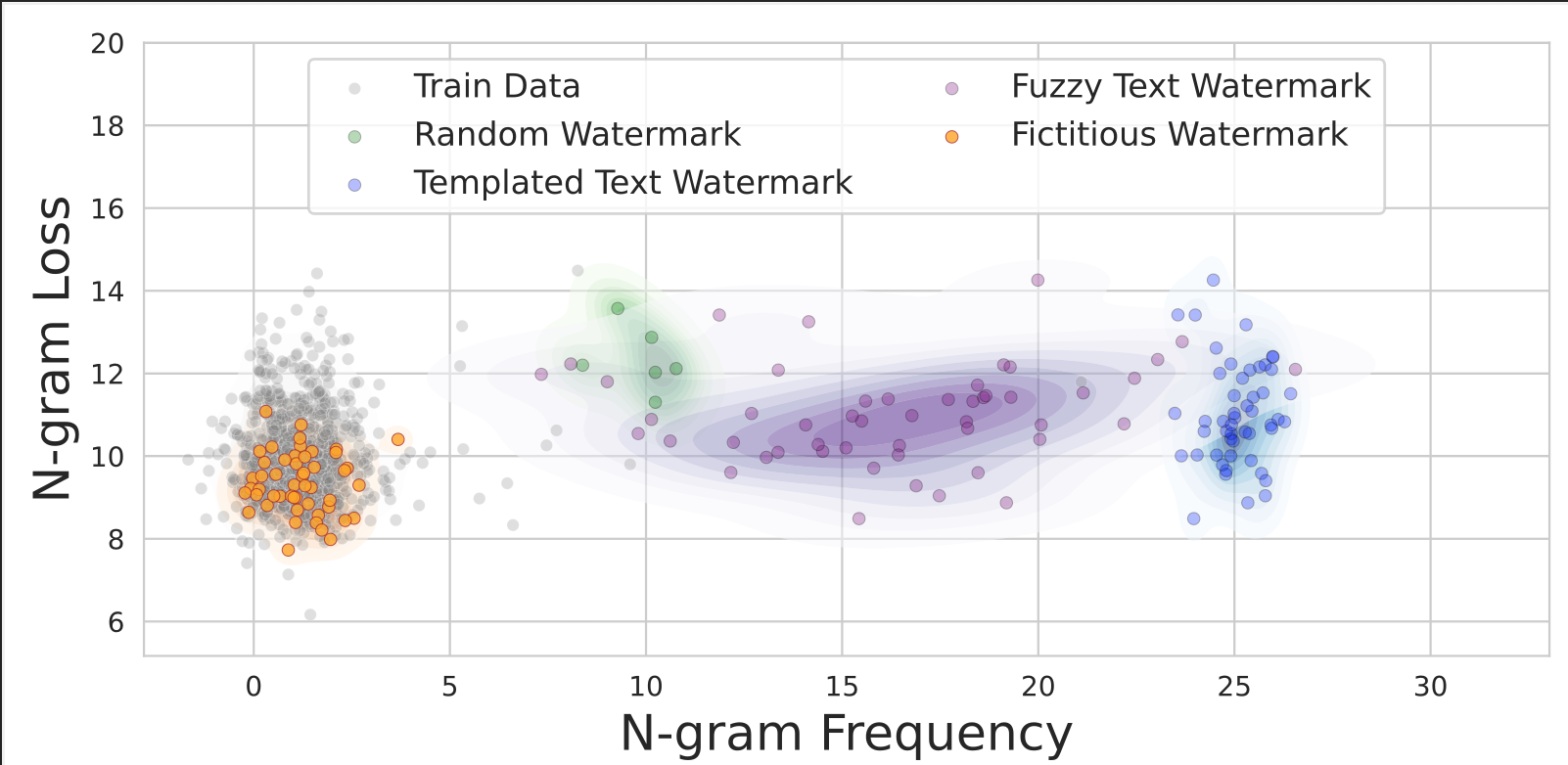

- Safety-Critical, Robust and Reliable Frameworks for Evaluation:

- What cannot be measured cannot be improved. How can we reliably compare the generative capabilities of language models, and ensure our assessment is robust? How can we tell whether matching performance translates to application safety, especially when there are societal implications? How can we evaluate new capabilities in LLMs when we do not necessarily know the correct answer?


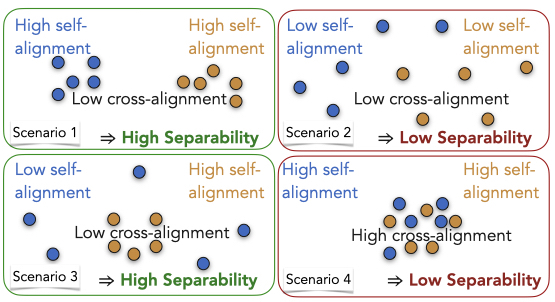

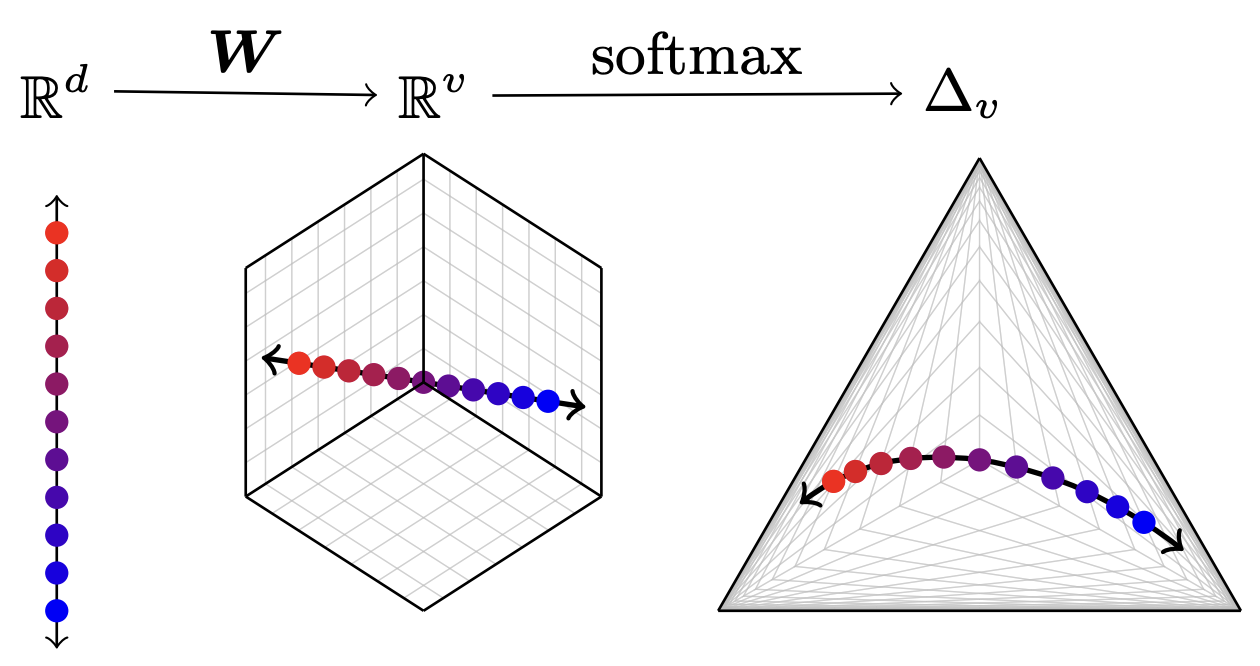

- Understanding the Mechanisms that Drive Language Technologies:

- Even the most reliable evaluation may not reveal much about the mechanisms driving powerful yet opaque models. What do model geometries reveal about the processes underlying our models, and how can we improve models through different designs? Are models by design limited to making some choices which can uniquely identify them?


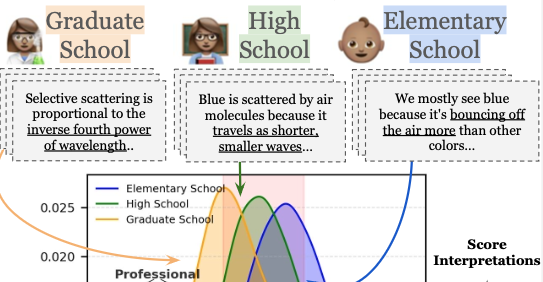

- Human and AI Collaboration:

- AI technologies are designed by humans and for humans; the future of AI involves cooperation and collaboration with humans. How can we say when a general-purpose model will reliably serve the custom utility for a human user? Where can these technologies complement human capabilities and where not?

These challenges require novel and creative techniques for redesigning generative evaluation to keep pace with model performance. This brings together a broad array of empirical research with theoretical fundamentals underlying language models.

news

| Date | News |
|---|---|
| Apr 25, 2025 | Honored to receive a sponsored research grant from Apple. |
| Apr 23, 2025 | DILL Lab has newly minted entrepreneurs: Jaspreet Ranjit and Aryan Gulati are the Min Family Challenge winners in 2025. |
| Apr 23, 2025 | DILL Lab wins two awards at ShowCAIS 2025: best poster by undergrad Risha Surana and runner-up best oral presentation by Jaspreet Ranjit. |
| Apr 08, 2025 | DILL Lab students Matt Finlayson and Ryan Wang (who’s joining UC Berkeley soon) received the NSF Graduate Research Fellowship this year! |
| Mar 31, 2025 | Co-organizing The Futures of Language Models and Transformers this week with Sasha Rush, as part of the Special Program on LLMs (Part 2). |

selected publications

See here for a full list.

- arXiv
- NeurIPS: Proc. of NeurIPS, 2025
- CoLM: Conference on Language Modeling, 2025
- NeurIPS: EAAMO / NeurIPS Workshop on GenAI for Health, 2025
- ACL: Findings of ACL, 2025
- ACL
- EMNLP: Findings of EMNLP, 2024
- COLM
- ICML: Proc. of ICML, 2022
- NeurIPS
- EMNLP: Proc. of EMNLP, 2020
- ACL
- NAACL: Proc. of NAACL, 2018